Introduction

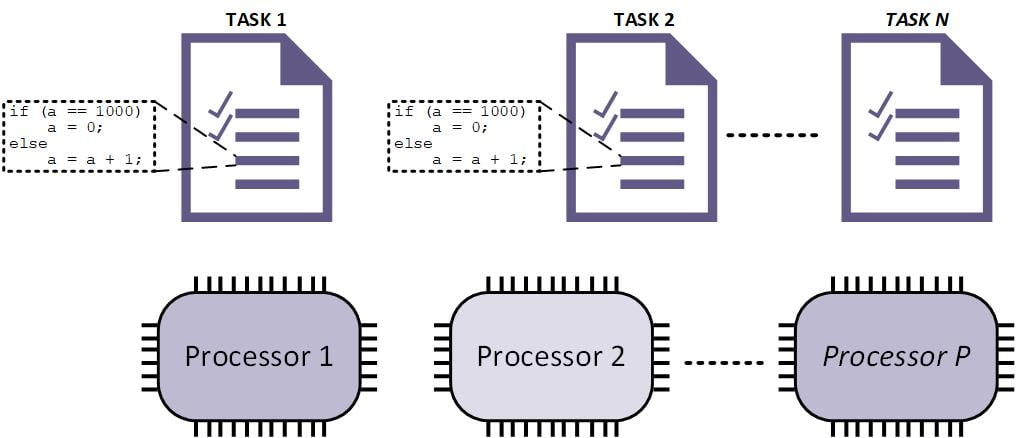

In real-time operating systems (RTOS), synchronization mechanisms, critical sections, and mutexes play a crucial role in managing shared resources and ensuring task safety. For example, consider the system depicted in Figure 1.

In this system, two or more tasks execute the following code to record, for example, the number of times the tasks are executed, resetting it to 0 when the count reaches the value of 1000.

```c
if (a == 1000)
    a = 0;
else
    a = a + 1;
```

The variable ‘a’, which stores the count, is shared among multiple tasks, so access to it must be synchronized. Without synchronization, a critical race condition can occur. For example, tasks 1 and 2 may both read ‘a’ while it equals 999, so neither resets it, and both then increment it, potentially leaving it at 1001*. From then on the check `a == 1000` never succeeds, so the code keeps incrementing the variable beyond the end of its range in future invocations.

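* NOTE: Depending on how the code is compiled and on whether variable ‘a’ is volatile, it may end up with the value 1000 or 1001. If each task re-reads ‘a’ before incrementing it, the second increment yields 1001; if both reuse the 999 they already read, both write 1000 and one increment is lost.

To synchronize access to variable ‘a’ and eliminate the risk of critical races, semaphores can be used, as well as critical sections in uniprocessor systems.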
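The interleaving described above can be replayed deterministically. The following C sketch is a model, not GeMRTOS code: it splits each task's execution into a check step and an update step (the hypothetical functions `check()` and `update()`) and runs them either in the racy order or in the serialized order that a semaphore would enforce. The update re-reads ‘a’, which corresponds to the 1001 case in the note.

```c
/* Shared counter from the example above. */
static int a;

/* One task's execution of the counter code, split into two steps so that
   the interleaving can be replayed deterministically (hypothetical model). */
typedef struct {
    int reset;  /* result of the check (a == 1000) */
} task_state;

/* Step 1: evaluate the condition. */
static void check(task_state *t) { t->reset = (a == 1000); }

/* Step 2: apply the branch chosen in step 1, re-reading a. */
static void update(const task_state *t) {
    if (t->reset)
        a = 0;
    else
        a = a + 1;
}

/* Unsafe order: both tasks check before either updates. */
static int replay_race(int start) {
    task_state t1, t2;
    a = start;
    check(&t1);
    check(&t2);
    update(&t1);
    update(&t2);
    return a;
}

/* Serialized order: the check and update of one task complete
   before the other task starts. */
static int replay_serialized(int start) {
    task_state t1, t2;
    a = start;
    check(&t1);
    update(&t1);
    check(&t2);
    update(&t2);
    return a;
}
```

Starting from 999, `replay_race(999)` returns 1001, while `replay_serialized(999)` returns 0 because the second task sees 1000 and resets the counter.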

Semaphore, a synchronization mechanism

A semaphore is a synchronization primitive used to control access to a shared resource by multiple tasks. It maintains a count to track the number of available resources. GeMRTOS provides operations such as gu_sem_wait() and gu_sem_post() to acquire and release resources, respectively.

In the code of the previous example, access to variable ‘a’ can be synchronized using a semaphore as follows:

```c
gu_sem_wait(sem_a, G_TRUE);
if (a == 1000)
    a = 0;
else
    a = a + 1;
gu_sem_post(sem_a);
```

In this way, the operation `gu_sem_wait(sem_a, G_TRUE)` ensures that the subsequent operations execute only once the task has acquired the semaphore `sem_a`. The parameter `G_TRUE` makes the operation blocking: the task waits until `sem_a` becomes available. At the end of the code, the semaphore is released with the operation `gu_sem_post(sem_a)`.
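The same pattern can be tried outside GeMRTOS. The following sketch uses a POSIX unnamed semaphore (`sem_init`, `sem_wait`, `sem_post`) as a stand-in for `gu_sem_wait(sem_a, G_TRUE)` and `gu_sem_post(sem_a)`, with two POSIX threads playing the tasks; the functions `count_invocation()` and `run_two_tasks()` are hypothetical. Because every update happens while the semaphore is held, each call advances the counter exactly one step through the cycle 0 to 1000 and back to 0, so after 2 × `iters` calls the result is always (2 × `iters`) mod 1001.

```c
#include <pthread.h>
#include <semaphore.h>

static int a;        /* shared invocation counter */
static sem_t sem_a;  /* binary semaphore guarding a (initial count 1) */

/* The counter code from the text, bracketed by wait/post.
   sem_wait blocks until the semaphore is available, like the
   blocking gu_sem_wait(sem_a, G_TRUE) described above. */
static void count_invocation(void) {
    sem_wait(&sem_a);
    if (a == 1000)
        a = 0;
    else
        a = a + 1;
    sem_post(&sem_a);
}

/* Body of each "task": run the counter code iters times. */
static void *task(void *arg) {
    int iters = *(int *)arg;
    for (int i = 0; i < iters; i++)
        count_invocation();
    return NULL;
}

/* Runs two tasks concurrently and returns the final counter value. */
static int run_two_tasks(int iters) {
    pthread_t t1, t2;
    a = 0;
    sem_init(&sem_a, 0, 1);  /* shared between threads, count 1 */
    pthread_create(&t1, NULL, task, &iters);
    pthread_create(&t2, NULL, task, &iters);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    sem_destroy(&sem_a);
    return a;
}
```

For example, `run_two_tasks(10000)` returns 981, that is, 20000 mod 1001, regardless of how the two threads interleave.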

NOTE 1: A semaphore ensures that no other task accesses the shared resource while a task holds it, provided every task accesses the resource through the semaphore. However, it does not prevent the code from being preempted while it executes. That is, the task holding the semaphore can be preempted by another task with higher priority.

NOTE 2: In uniprocessor systems, critical sections are used as synchronization mechanisms, but this approach does not work in multiprocessor systems.

Critical Section (a synchronization mechanism only for uniprocessor systems)

A critical section is a portion of code that must be executed atomically to prevent critical race conditions and data corruption. In uniprocessor RTOSs, critical sections are often implemented by disabling processor interrupts. Because only one processor executes tasks, disabling its interrupts prevents any interrupt, and therefore any preemption by the scheduler, from occurring, so the processor is forced to execute the task's code section atomically.

```c
ENTER_CRITICAL_SECTION; // disable processor interrupts
if (a == 1000)
    a = 0;
else
    a = a + 1;
EXIT_CRITICAL_SECTION; // enable processor interrupts
```

Note that in a uniprocessor system this mechanism not only makes the code section atomic but also excludes every other task from executing, at least until the critical section ends and processor interrupts are re-enabled.

In a multiprocessor system, disabling processor interrupts makes a code section atomic on the processor that executes it, but it does not prevent critical race conditions and data corruption, because tasks on the other processors keep running. Consider the following code:

```c
while (a != GRTOS_CMD_PRC_ID) {
    if (a == 0) a = GRTOS_CMD_PRC_ID;
}
// Next code is executed by processor GRTOS_CMD_PRC_ID
…
a = 0; // end code execution
```

The purpose of this code excerpt is to guarantee that the subsequent code is executed only by the processor whose ID is stored in variable ‘a’. However, if two processors execute this code concurrently in separate tasks, both may find ‘a’ equal to zero before either writes it. Both then store their IDs in ‘a’, and each may see its own ID when it re-evaluates the loop condition, so both processors execute the subsequent code simultaneously.

This simultaneous execution can potentially lead to critical race conditions and data corruption. Merely introducing a critical section will not prevent such simultaneous execution.
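The window between reading ‘a’ and writing it is what lets both processors through. The following C11 sketch first replays that interleaving step by step, then shows a common remedy: an atomic compare-and-swap (`atomic_compare_exchange_strong` from `<stdatomic.h>`) performs the check and the assignment as one indivisible operation, similar in spirit to what a hardware mutex provides. The names `replay_plain_claims()`, `try_claim()` and `release_claim()` are hypothetical, and processor IDs are plain integers here rather than `GRTOS_CMD_PRC_ID`.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Replays the unsafe interleaving for processors 1 and 2, with every
   read and write of `a` as a separate step. Returns how many
   processors end up past the loop at the same time. */
static int replay_plain_claims(void) {
    int a = 0;
    bool p1_free = (a == 0);  /* processor 1 reads a == 0 */
    bool p2_free = (a == 0);  /* processor 2 reads a == 0 */
    int inside = 0;
    if (p1_free) a = 1;       /* processor 1 stores its ID */
    if (a == 1) inside++;     /* processor 1 sees its ID and leaves the loop */
    if (p2_free) a = 2;       /* processor 2 stores its ID */
    if (a == 2) inside++;     /* processor 2 sees its ID and leaves the loop */
    return inside;
}

static atomic_int owner;  /* 0 = free, otherwise the owning processor's ID */

/* Check and assignment as one indivisible step: succeeds only if
   owner was 0, and in that case stores id. */
static bool try_claim(int id) {
    int expected = 0;
    return atomic_compare_exchange_strong(&owner, &expected, id);
}

/* Marks the section as free again. */
static void release_claim(void) { atomic_store(&owner, 0); }
```

With the plain reads and writes both processors get through, whereas with `try_claim()` the second processor fails until the first calls `release_claim()`.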

To avoid this, a semaphore can be used, as in the preceding section:

```c
while (a != GRTOS_CMD_PRC_ID) {
    gu_sem_wait(sem_a, G_TRUE);
    if (a == 0) a = GRTOS_CMD_PRC_ID;
    else {
        gu_sem_post(sem_a);
    }
}
// Next code is executed by processor GRTOS_CMD_PRC_ID
// while still holding semaphore sem_a
…
a = 0;
gu_sem_post(sem_a); // end code execution
```

In this scenario, semaphore ‘sem_a’ synchronizes access to the comparison and assignment code; however, it does not ensure atomic execution. Consequently, the following sequence of events may occur:

- A task, executed by processor 1, acquires semaphore ‘sem_a’.

- It finds that variable ‘a’ is zero.

- The task assigns the value 1 (the ID of the current processor) to variable ‘a’.

- The task is then preempted by a higher-priority task.

- The task later resumes execution, this time on processor 2.

- As a result, the task never leaves the loop: variable ‘a’ retains the value 1, which does not match the ID of the processor now executing the task (processor 2).
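The last step of this sequence can be reproduced with a small model. The following sketch (the function `loop_exits()` is hypothetical) starts with ‘a’ already holding the ID of the processor on which the task claimed the section, then re-evaluates the loop from the text on the processor where the task resumed. It models only the loop condition, not the semaphore, and bounds the loop so that the model terminates.

```c
#include <stdbool.h>

/* Models `while (a != GRTOS_CMD_PRC_ID) { if (a == 0) a = GRTOS_CMD_PRC_ID; }`
   after a migration: claimed_on is the processor ID stored in a before
   preemption, resumed_on is the processor now running the task. The
   max_iters bound exists only so that the model returns. */
static bool loop_exits(int claimed_on, int resumed_on, int max_iters) {
    int a = claimed_on;        /* value written before preemption */
    for (int i = 0; i < max_iters; i++) {
        if (a == resumed_on)
            return true;       /* loop condition false: task proceeds */
        if (a == 0)
            a = resumed_on;    /* taken only if the section was free */
    }
    return false;              /* still looping after max_iters */
}
```

A task that claimed the section on processor 1 and resumes on processor 2 never exits (`loop_exits(1, 2, n)` is false for any `n`), while one that resumes on processor 1 exits immediately.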

Synchronization with Critical Sections

As previously explained, merely disabling processor interrupts does not ensure exclusion in the execution of critical sections within multiprocessor systems. Furthermore, disabling interrupts delays operating system services, including the operating system kernel itself, and can lead to priority inversion. Therefore, a task must not wait for a resource with interrupts disabled: if the resource cannot be obtained, it must exit the critical section so that other tasks and the operating system are not blocked.

The following code exemplifies the combined use of semaphores and critical sections to prevent critical race conditions and achieve atomic execution without excessively blocking the system.

```c
ENTER_CRITICAL_SECTION; // disable processor interrupts
while (a != GRTOS_CMD_PRC_ID) {
    gu_sem_wait(sem_a, G_TRUE);
    if (a == 0) a = GRTOS_CMD_PRC_ID;
    else {
        gu_sem_post(sem_a);
        EXIT_CRITICAL_SECTION; // enable processor interrupts
    }
    ENTER_CRITICAL_SECTION; // disable processor interrupts
}
// Next code is executed by processor GRTOS_CMD_PRC_ID
…
a = 0;
gu_sem_post(sem_a); // end code execution
EXIT_CRITICAL_SECTION; // enable processor interrupts
```

This code is complex and of limited practical use. Its purpose is to distinguish the different mechanisms and consequences that must be considered in multiprocessor systems, and to steer away from uniprocessor practices that are not feasible in multiprocessor systems.

Synchronization and atomic execution can also be implemented with mutexes. Hardware mutexes can easily be added to the system with Intel’s Platform Designer development tool.

Mutex

A mutex (short for mutual exclusion) is a synchronization primitive that provides exclusive access to a shared resource. It allows only one thread or task to acquire the mutex at a time, preventing concurrent access by other threads. Mutexes are typically used to protect critical sections of code and ensure data integrity.

The basic operations of a mutex are:

- Locking: Also known as acquiring or taking the mutex, this operation grants exclusive access to the shared resource if available. If the mutex is already locked by another thread, the current thread may block or spin until the mutex becomes available.

- Unlocking: Also known as releasing or giving up the mutex, this operation allows other threads to acquire the mutex and access the shared resource.
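The two operations above can be sketched with C11 atomics. This is a spinning mutex (a thread busy-waits rather than blocking), with hypothetical names `spin_mutex`, `spin_lock()`, `spin_trylock()` and `spin_unlock()`; it is a generic illustration, not the GeMRTOS mutex or Intel's hardware mutex core. POSIX threads stand in for tasks.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Minimal spinning mutex built on a C11 atomic_flag. */
typedef struct { atomic_flag held; } spin_mutex;

/* Locking: spin until the flag was previously clear. */
static void spin_lock(spin_mutex *m) {
    while (atomic_flag_test_and_set_explicit(&m->held, memory_order_acquire))
        ;  /* another thread holds the mutex: keep spinning */
}

/* Non-spinning variant: returns true only if the mutex was free. */
static bool spin_trylock(spin_mutex *m) {
    return !atomic_flag_test_and_set_explicit(&m->held, memory_order_acquire);
}

/* Unlocking: clear the flag so another thread can acquire it. */
static void spin_unlock(spin_mutex *m) {
    atomic_flag_clear_explicit(&m->held, memory_order_release);
}

static spin_mutex counter_mutex = { ATOMIC_FLAG_INIT };
static long counter;

/* Each worker increments the shared counter iters times under the mutex. */
static void *worker(void *arg) {
    long iters = *(long *)arg;
    for (long i = 0; i < iters; i++) {
        spin_lock(&counter_mutex);
        counter++;
        spin_unlock(&counter_mutex);
    }
    return NULL;
}

/* Runs two workers concurrently and returns the final counter value. */
static long run_workers(long iters) {
    pthread_t t1, t2;
    counter = 0;
    pthread_create(&t1, NULL, worker, &iters);
    pthread_create(&t2, NULL, worker, &iters);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return counter;
}
```

Because every increment happens with the mutex held, `run_workers(n)` always returns 2 × `n`.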

GeMRTOS implements an advanced mutex to safeguard all access to GeMRTOS’s data structures and code:

- GRTOS_USER_CRITICAL_SECTION_GET: locks the GeMRTOS mutex.

- GRTOS_USER_CRITICAL_SECTION_RELEASE: unlocks the GeMRTOS mutex.

The GeMRTOS mutex can be used to protect calls to functions that are not multiprocessor-safe, such as newlib functions.

NOTE: When using the GeMRTOS mutex in user tasks, keep in mind that while it is locked, all GeMRTOS functions are suspended until it is unlocked and becomes available for GeMRTOS operations again. For example, it should not be held while accessing a slow input/output device.
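A common use of such a global lock is to serialize calls into a library routine that keeps hidden state. The sketch below is a POSIX analogue, not GeMRTOS code: a `pthread_mutex_t` named `library_lock` (hypothetical) plays the role of the GeMRTOS mutex, and the standard C function `strtok`, which remembers its position between calls, plays the role of a function that is not multiprocessor-safe. In keeping with the note above, the lock covers only the tokenizing loop and nothing slow.

```c
#include <pthread.h>
#include <string.h>

/* One global lock shared by every task that calls into the
   non-reentrant library (hypothetical stand-in for the GeMRTOS mutex). */
static pthread_mutex_t library_lock = PTHREAD_MUTEX_INITIALIZER;

/* strtok keeps hidden static state between calls, so two tasks
   tokenizing at once would corrupt each other's position. The whole
   strtok sequence runs under the lock, and no I/O is done while it is
   held. Modifies text in place; returns the number of tokens found. */
static int count_tokens(char *text, const char *delims) {
    int n = 0;
    pthread_mutex_lock(&library_lock);
    for (char *tok = strtok(text, delims); tok != NULL; tok = strtok(NULL, delims))
        n++;
    pthread_mutex_unlock(&library_lock);
    return n;
}
```

For example, counting the comma-separated tokens of `"a,b,,c"` gives 3, since `strtok` skips the empty field.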

Scheduling List Exclusion

GeMRTOS introduces a novel mechanism known as scheduling lists. These lists assign tasks to processors, scheduling them according to the designated priority discipline. Among other parameters, each scheduling list has an exclusion setting, which specifies the maximum number of tasks assigned to the list that can execute simultaneously.

Setting the exclusion parameter to 1 makes the tasks in the list scheduled as if they ran on a uniprocessor system: only one of them executes at a time. Consequently, a critical section executes its code atomically and exclusively, preventing critical race conditions among all tasks assigned to the same scheduling list.
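The effect of the exclusion parameter can be illustrated with a toy dispatcher. The following sketch is a hypothetical model, not the GeMRTOS scheduler: the type `task_t`, the array `exclusion[]` and the function `dispatch()` are assumptions. Ready tasks are visited in priority order and given a processor only while their scheduling list is below its exclusion limit, so with an exclusion of 1 at most one task of the list runs at a time, however many processors are idle.

```c
#define MAX_LISTS 4

/* Hypothetical task descriptor: only the scheduling list matters here. */
typedef struct {
    int list;  /* index of the scheduling list the task is assigned to */
} task_t;

/* Assigns up to n_procs ready tasks (already ordered by priority) to
   processors, never letting more than exclusion[l] tasks of list l run
   at once. Sets running[i] to 1 for each dispatched task and 0 otherwise,
   and returns how many processors were used. */
static int dispatch(const task_t *ready, int n_ready, const int *exclusion,
                    int n_procs, int *running) {
    int per_list[MAX_LISTS] = {0};
    int used = 0;
    for (int i = 0; i < n_ready; i++) {
        running[i] = 0;
        if (used == n_procs)
            continue;                /* no idle processor left */
        int l = ready[i].list;
        if (per_list[l] < exclusion[l]) {
            per_list[l]++;           /* one more task of list l running */
            running[i] = 1;
            used++;
        }
    }
    return used;
}
```

With three ready tasks in list 0, one in list 1, four processors and an exclusion of 1 for both lists, only two processors are used: the highest-priority task of each list.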

Conclusions

In a system with multiple tasks and processors, it is crucial to synchronize code sections and execute them atomically to prevent critical race conditions and data corruption. In uniprocessor systems, disabling processor interrupts inherently provides exclusion, because there is only one processor in the system. In multiprocessor systems, ensuring atomic execution and synchronized access to resources may require using semaphores and critical sections together, or using mutexes alone.

GeMRTOS provides a comprehensive solution for implementing applications safely. It offers semaphores, primitives for critical sections, the GeMRTOS mutex, and the ability to define scheduling lists with processor exclusion. With this last option, all tasks that may cause critical races can be assigned to the same scheduling list, with its processor exclusion set to 1. In this way, all tasks in that list are scheduled as if they were in a uniprocessor system.